Do interfaces terminate dependencies?

Response to a response.

A previous post explored the relationship between transitive dependencies and abstract methods in Java programs. Jaime Metcher penned an excellent criticism of the post on his blog, where he concludes:

So I suspect that what Edmund has discovered is a correlation between the use of interfaces and modular program structure. But that's just a correlation.

This is entirely correct, and thank you, Jaime, for the clarification.

Jaime also makes an even more interesting comment:

Finally, the notion that interfaces act as a termination point for dependencies seems a little odd. An interface merely represents a point at which a dependency chain becomes potentially undiscoverable by static analysis. Unquestionably the dependencies are still there, otherwise your call to that method at run-time wouldn’t do anything.

This blog has twice tried to understand how interfaces relate to structure, and the above sharply defines the challenge.

So: do interfaces terminate dependencies?

There are two reasons why a programmer might believe that they do.

Firstly, programmers study structure for many reasons, one being cost analysis.

In a poorly structured program, changes to code in one place lead to changes in many others (the dreaded ripple effect), thus increasing the cost of feature introduction and modification. A well-structured system makes such costs easier to predict - and can often reduce them - as programmers can easily see how the various parts depend upon one another.

And this is almost entirely a matter of text.

Source code is text. Programmers update code by updating text. Ripple-effects are beasts of textual relationship. That text structure may or may not survive into run-time, but programmers rarely splice byte-code to save costs.

It is in this textual sense that interfaces terminate dependencies: they terminate textual dependencies. A client class with a text dependency on interface A has no text dependency on whatever class implements A.
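Because this argument is purely textual, a naive check can illustrate it. The Java sketch below is a hypothetical helper (`TextualDeps`, `dependenciesOf`, the sample `Client` source and the implementation name `AImpl` are illustrative, not part of the original analysis): it reports which candidate type names appear as whole words in a class's source text. Run on a client that holds a field of interface type `A`, it should find `A` but not `AImpl`.

```java
import java.util.List;
import java.util.Set;
import java.util.TreeSet;
import java.util.regex.Pattern;

class TextualDeps {
    // Returns the candidate type names that appear as whole words in the source text.
    // Assumption: a whole-word regex match stands in for a real parser, so names
    // inside comments or string literals would also be counted.
    static Set<String> dependenciesOf(String source, List<String> knownTypes) {
        Set<String> found = new TreeSet<>();
        for (String type : knownTypes) {
            Pattern word = Pattern.compile("\\b" + Pattern.quote(type) + "\\b");
            if (word.matcher(source).find()) {
                found.add(type);
            }
        }
        return found;
    }

    // Hypothetical client source: it names the interface A, never an implementation.
    static final String CLIENT_SOURCE = String.join("\n",
        "class Client {",
        "    private final A service;",
        "    Client(A service) { this.service = service; }",
        "    void run() { service.execute(); }",
        "}");
}
```

Calling `dependenciesOf(CLIENT_SOURCE, List.of("A", "AImpl"))` would yield only `A`. At run time `Client` may well execute `AImpl` code, which is Jaime's point, but that call never appears in `Client`'s text.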

Of course, the implementation might change, thus forcing a change on the interface, and this will ripple back to the client class. This brings us to our second point.

The second reason why a programmer might believe that interfaces terminate dependencies is: programmer intent.

Programmers use abstraction to allow a degree of change beyond that provided by concrete dependencies. When a client class must depend on two others of widely different behaviour, then so be it. But if it depends on multiple similar behaviours, and the programmer knows that more such behaviours are chomping at the backlog, then that programmer may choose to extract an interface to capture that similar behaviour, thus reducing the client class's exposure to its service providers, and hence its exposure to ripple effects.
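A minimal sketch of that extraction, assuming a hypothetical reporting client that publishes a document in several similar formats (all class names here are illustrative). Before extraction the client names each concrete writer; after it, the client names only `Exporter`, so a behaviour from the backlog such as `JsonExporter` can be added without editing `ReportClientAfter`.

```java
import java.util.ArrayList;
import java.util.List;

// BEFORE (hypothetical): the client names each similar concrete class,
// so every new format means editing the client.
class PdfWriter { String write(String doc) { return "pdf:" + doc; } }
class CsvWriter { String write(String doc) { return "csv:" + doc; } }

class ReportClientBefore {
    List<String> publish(String doc) {
        List<String> out = new ArrayList<>();
        out.add(new PdfWriter().write(doc));
        out.add(new CsvWriter().write(doc));
        return out;
    }
}

// AFTER (hypothetical): the shared behaviour is extracted into an interface.
interface Exporter { String export(String doc); }

class PdfExporter implements Exporter { public String export(String doc) { return "pdf:" + doc; } }
class CsvExporter implements Exporter { public String export(String doc) { return "csv:" + doc; } }
// A behaviour from the backlog, added without touching ReportClientAfter.
class JsonExporter implements Exporter { public String export(String doc) { return "json:" + doc; } }

class ReportClientAfter {
    private final List<Exporter> exporters;

    ReportClientAfter(List<Exporter> exporters) { this.exporters = exporters; }

    // The client's text depends on Exporter alone.
    List<String> publish(String doc) {
        List<String> out = new ArrayList<>();
        for (Exporter e : exporters) {
            out.add(e.export(doc));
        }
        return out;
    }
}
```

The concrete exporters are still named somewhere, typically wherever the list is assembled, but that text sits outside the client.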

The interface becomes a crackling neon sign powered by the programmer's intent, reading, "Look! Here's a place where there's a reduced probability of ripple-effect because I think all these behaviours will be the same and we can add more without affecting the client!"

It's that pesky cost model, again.

You are free to model your potential update costs any way you like, including modeling interfaces as non-terminating for dependencies and hence having client classes depend on all the implementations behind the interface. But then your cost model will ignore precisely those neon signs where the programmer has tried to reduce ripple-effect costs: your cost model will overestimate.
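The two cost models can be sketched as two ways of walking the same dependency graph. In this hypothetical Java sketch, `textual` maps each class to the types its source names, and `implementations` maps each interface to its implementing classes; with `interfacesTerminate` set to `false`, reaching an interface also pulls in every implementation and whatever those depend on. Treating the size of the resulting set as a proxy for ripple-effect exposure is an assumption made for illustration.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class CostModel {
    // Transitive dependencies of `start` under one of the two modelling assumptions.
    static Set<String> reach(String start,
                             Map<String, List<String>> textual,
                             Map<String, List<String>> implementations,
                             boolean interfacesTerminate) {
        Set<String> seen = new TreeSet<>();
        Deque<String> work = new ArrayDeque<>();
        work.push(start);
        while (!work.isEmpty()) {
            String current = work.pop();
            // Always follow the textual dependencies named in the source.
            List<String> next = new ArrayList<>(textual.getOrDefault(current, List.of()));
            if (!interfacesTerminate) {
                // Non-terminating model: an interface also drags in its implementations.
                next.addAll(implementations.getOrDefault(current, List.of()));
            }
            for (String dep : next) {
                if (seen.add(dep)) {
                    work.push(dep);
                }
            }
        }
        return seen;
    }
}
```

With a hypothetical client that names only `A`, and `A` implemented by `FastA` (which also uses `Cache`) and `CheapA`, the terminating model gives the client one dependency, `A`, while the non-terminating model gives four: `A`, `FastA`, `CheapA` and `Cache`.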

Of course, an even worse scenario would see you luxuriate in the terminating-interfaces cost model yet use abstraction so badly that ripple-effect cost is not, in practice, reduced: then your model will underestimate costs.

The point of both arguments is that focusing on source code structure, ignoring run-time structure, often proves worthwhile because it's in the source-code arena where programmers battle costs.

Where's the proof?

But hang on. Where's the evidence for all this? Surely so powerful a claimed mechanism would leave some trace on our programs. How do we even know that programmers use interfaces as points of reduced ripple-effect probability?

To answer this is easy. Just interview all the developers on a large project, review all of the tens of thousands of methods over all releases throughout the project's entire lifetime, identify which methods changed, identify which changes rippled up to change the calling method either directly or via an interface, and count the percentages of both. If you can show that fewer clients were impacted via interfaces than via direct dependencies, then you've shown a cost model that goes some way to validating that interfaces terminate dependencies.

And then repeat the process with five other large projects to get a good statistical sample of hundreds of thousands of methods.

Ehhm: no.

What we can do is fire up a parser that can identify method changes between consecutive releases of various software projects. Of course, being automated, it cannot tell when a change to, say, an implementation caused a change to a client of its interface: those two changes could have happened independently. Still, we expect to see some correlation through the noise.
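The change-detection step might look roughly like this, assuming a parser has already reduced each release to a map from method signature to method body text. The post does not describe its parser, so `ReleaseDiff` and its input format are hypothetical. A method counts as changed if it exists in both releases and its whitespace-normalised body differs; added and removed methods are ignored in this sketch.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class ReleaseDiff {
    // Collapses runs of whitespace so that pure re-indentation is not counted as a change.
    static String normalise(String body) {
        return body.trim().replaceAll("\\s+", " ");
    }

    // Methods present in both releases whose normalised bodies differ.
    // Each map goes from a method signature to that method's body text.
    static Set<String> changedMethods(Map<String, String> older, Map<String, String> newer) {
        Set<String> changed = new TreeSet<>();
        for (Map.Entry<String, String> entry : newer.entrySet()) {
            String before = older.get(entry.getKey());
            if (before != null && !normalise(before).equals(normalise(entry.getValue()))) {
                changed.add(entry.getKey());
            }
        }
        return changed;
    }
}
```

As the text warns, two methods appearing in the same changed set shows only that they changed together, not that one change caused the other.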

Ideally, we would like any evidence to take the form of two lines on a graph, say red and blue.

Because programs have many more concrete classes than interfaces, merely showing fewer interface-mediated changes than direct ones would be too weak, so both lines present percentages rather than absolute values. The red line would show the number of changed methods in each release whose calling methods also changed, as a percentage of the total number of changed methods. This would suggest ripple-effects acting along direct concrete dependencies.

The blue line would show the number of changed client methods calling an interface method when any of that interface method's implementations also changed, as a percentage of the total number of changed implementation methods. This would suggest ripple-effects acting through interfaces.
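The two percentages for a single pair of consecutive releases could be computed as below. This is a sketch under stated assumptions: `directCallers` maps a concrete method to the methods that call it directly, `interfaceImpls` maps an interface method to its implementing methods, `interfaceCallers` maps an interface method to the client methods that call it, and "whose calling methods also changed" is read as "at least one direct caller also changed". These names and that reading are hypothetical, not taken from the original parser.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

class RippleMetrics {
    // Red line: percentage of changed methods with at least one changed direct caller.
    static double red(Set<String> changed, Map<String, Set<String>> directCallers) {
        if (changed.isEmpty()) return 0.0;
        long rippled = changed.stream()
            .filter(m -> directCallers.getOrDefault(m, Set.of()).stream().anyMatch(changed::contains))
            .count();
        return 100.0 * rippled / changed.size();
    }

    // Blue line: changed clients of interface methods that have a changed implementation,
    // as a percentage of all changed implementation methods.
    static double blue(Set<String> changed,
                       Map<String, Set<String>> interfaceImpls,
                       Map<String, Set<String>> interfaceCallers) {
        Set<String> changedImpls = new HashSet<>();
        Set<String> rippledClients = new HashSet<>();
        for (Map.Entry<String, Set<String>> entry : interfaceImpls.entrySet()) {
            boolean anyImplChanged = false;
            for (String impl : entry.getValue()) {
                if (changed.contains(impl)) {
                    changedImpls.add(impl);
                    anyImplChanged = true;
                }
            }
            if (anyImplChanged) {
                for (String client : interfaceCallers.getOrDefault(entry.getKey(), Set.of())) {
                    if (changed.contains(client)) rippledClients.add(client);
                }
            }
        }
        if (changedImpls.isEmpty()) return 0.0;
        return 100.0 * rippledClients.size() / changedImpls.size();
    }
}
```

Note that the blue line's numerator and denominator count different things (client methods versus implementation methods), exactly as the definition above does.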

The hypothesis that, "Interfaces terminate dependencies," would then demand simply that the red line be higher than the blue line for the vast majority of the projects' lifetimes.
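Each pair of consecutive releases yields one data point, which is why, for example, the 22 Spring releases in Figure 1 give 21 data points. Checking the hypothesis then reduces to counting the points where red exceeds blue. "Vast majority" is not defined numerically, so the threshold in this hypothetical sketch is an assumed parameter, and any sample values used with it are invented for illustration rather than read from the figures.

```java
class Hypothesis {
    // Counts data points (one per pair of consecutive releases) where red exceeds blue.
    static int redAboveBlue(double[] red, double[] blue) {
        if (red.length != blue.length) {
            throw new IllegalArgumentException("series differ in length");
        }
        int count = 0;
        for (int i = 0; i < red.length; i++) {
            if (red[i] > blue[i]) count++;
        }
        return count;
    }

    // Assumption: "vast majority" means at least `threshold` of the data points.
    static boolean supports(double[] red, double[] blue, double threshold) {
        return red.length > 0 && redAboveBlue(red, blue) >= threshold * red.length;
    }
}
```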

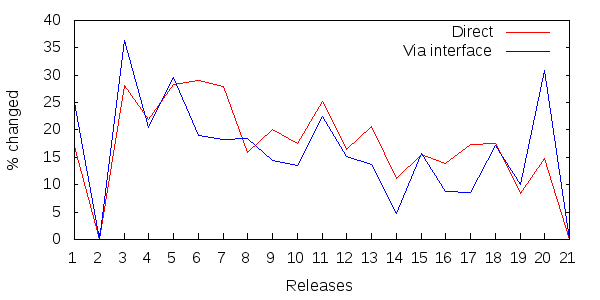

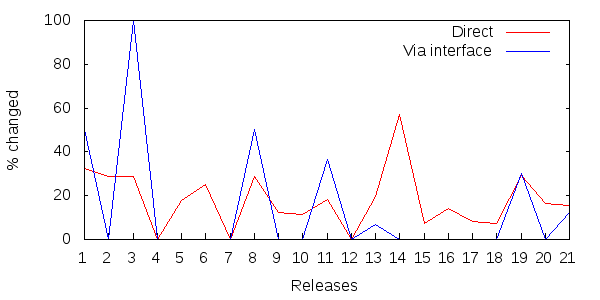

Figure 1 shows 22 consecutive releases of the Spring core jar file.

Figure 1: Ripple-effects in releases 0.9.0 - 3.2.2 of Spring.

17 of the 21 data points show more direct ripple-effects than via interfaces.

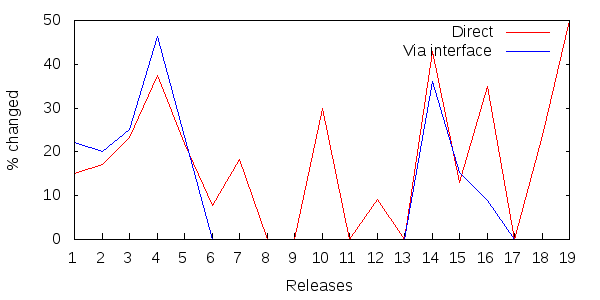

Figure 2 shows 20 consecutive releases of Struts.

Figure 2: Ripple-effects in releases 1.0.2 - 2.3.8 of Struts.

15 of the 19 data points show more direct ripple-effects than via interfaces.

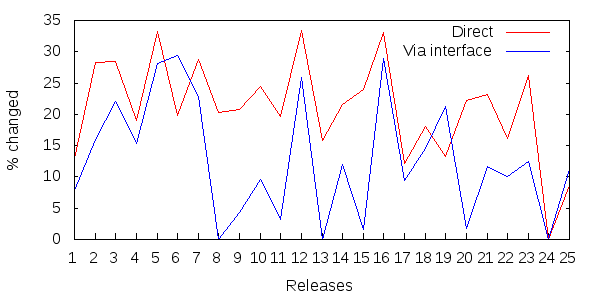

Figure 3 shows 26 consecutive releases of the Apache Lucene core jar file.

Figure 3: Ripple-effects in releases 1.9 - 4.3.0 of Lucene core.

Perhaps the clearest evidence of the set.

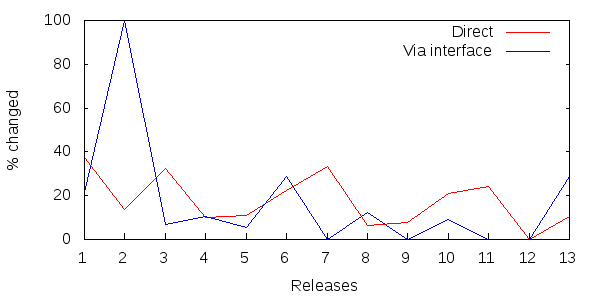

Figure 4 shows 14 consecutive releases of JUnit.

Figure 4: Ripple-effects in releases 3.7 - 4.11 of JUnit.

Bit of a mixed bag with JUnit, but 9 of the 13 data points show more direct ripple-effects than via interfaces.

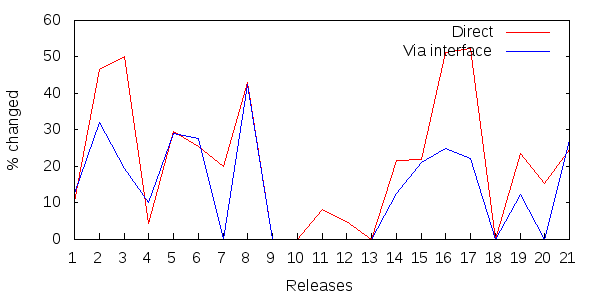

Figure 5 shows 22 consecutive releases of the Apache Maven core jar file.

Figure 5: Ripple-effects in releases 1.0.0 - 3.3.9 of Apache Maven.

17 of the 21 data points show more direct ripple-effects than via interfaces.

Figure 6 shows 22 consecutive releases of the Apache log4j core jar file.

Figure 6: Ripple-effects in releases 1.0.4 - 2.4.1 of Apache log4j.

18 of the 21 data points show more direct ripple-effects than via interfaces.

Not that any of this proves anything.

There are so many threats to the validity of this hypothesis that this post is little more than a doodle on the back of a digital envelope. The data seems to suggest the existence of a correlation - as Jaime noted - but does not prove it.

And once more - as Jaime also noted - this should not encourage you to pack your project with vapid interfaces to reduce the cost of ripple-effects. Abstraction must be used wisely. Indeed, this correlation - should it exist - would help identify meaningless abstraction, as adding more interfaces without maintaining the correlation would indicate abstractions that are not pulling their weight.

"The correlation between direct ripple-effects and ripple-effects via an interface," is a bit of a mouthful, however, and if further work demonstrated this correlation with statistical rigour, it would deserve a name.

So let's call it, "The Metcher Correlation," unless Jaime objects.

Summary.

The claim that "interfaces terminate dependencies" is not really right or wrong; it's an assumption in a software development cost model. If you make that assumption, you get one cost model; if you don't, you get another. The question is: which cost model is more accurate?

If you find that modeling interfaces as dependency-terminating more accurately reflects the realities of your code, then make the assumption that, "Interfaces terminate dependencies."

If you don't, don't.

PS All raw data and a rather sad addition to humanity's pile of tedious parsers will be available until Feb 2016 here.